The goal of web data collection is to take information that is scattered across the web and hard to use directly, and organize it into a structured format that can be used to answer business questions, improve algorithms, and compete more effectively.

How can you collect data from websites easily and accurately?

This blog introduces the 5 best web data collection tools. Read on to find the one that suits you best!

Top 5 Web Data Collection Tools

#1. Scrapeless: A comprehensive data collector.

#2. Mention: A useful news monitoring and keyword reminder tool.

#3. SurveyMonkey: Easily collect customer, employee and market insights.

#4. Lead411: Accurate sales intelligence platform.

#5. Magpi: A fully functional mobile-first data collection system.

What Is Web Data Collection?

Web data collection, also known as web scraping or data crawling, is the process of extracting structured or unstructured data from the Internet using automated tools.

Web data collection typically uses crawlers that simulate users visiting websites and then extract the required data by parsing the page content.

For example, you can collect product prices, inventory levels, and user reviews from e-commerce platforms, or trending topics and user engagement data from social media. This data can then be used for market research, competitive analysis, business decisions, SEO, or training AI models.
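The crawl-and-parse process described above can be sketched with Python's standard library alone. This is a minimal illustration, not how any particular tool works internally: the `fetch_html` and `extract_prices` helpers, the `price` class name, and the sample HTML are all hypothetical, and real sites usually need more robust parsing plus the anti-blocking features discussed later.

```python
from html.parser import HTMLParser
from urllib.request import Request, urlopen


def fetch_html(url: str) -> str:
    """Download a page like a browser would, sending a User-Agent header."""
    req = Request(url, headers={"User-Agent": "Mozilla/5.0 (example crawler)"})
    with urlopen(req, timeout=10) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.read().decode(charset, errors="replace")


class PriceParser(HTMLParser):
    """Collects the text of elements whose class list contains 'price'.

    Assumption: 'price' is a hypothetical class name, and the price text is
    not wrapped in further nested tags. Real sites differ.
    """

    def __init__(self) -> None:
        super().__init__()
        self.prices: list[str] = []
        self._in_price = False

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if "price" in classes:
            self._in_price = True

    def handle_endtag(self, tag):
        self._in_price = False

    def handle_data(self, data):
        if self._in_price and data.strip():
            self.prices.append(data.strip())


def extract_prices(html: str) -> list[str]:
    parser = PriceParser()
    parser.feed(html)
    parser.close()
    return parser.prices


# Offline demo on an inline snippet; for a live page: extract_prices(fetch_html(url))
sample = (
    '<ul><li><span class="name">Mug</span> <span class="price">$12.00</span></li>'
    '<li><span class="name">Beans</span> <span class="price">$8.50</span></li></ul>'
)
print(extract_prices(sample))  # ['$12.00', '$8.50']
```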

What Do Businesses Hope to Achieve through Web Data Collection?

Web data collection enables businesses to leverage the vast amount of information available online to gain actionable insights and drive strategic decisions.

By systematically collecting and analyzing this data, businesses aim to achieve several key goals:

- Market analysis and trend forecasting

Businesses use web data to monitor industry trends, consumer preferences, and market demands. This helps them stay ahead of competitors by adapting to emerging trends and tailoring their products or services accordingly.

- Competitor intelligence

By collecting data from competitor websites (e.g., pricing, product offerings, and marketing strategies), companies can identify gaps in the market, optimize their own strategies, and maintain a competitive advantage.

- Customer insights

Web data collection enables businesses to analyze customer behavior, reviews, and feedback. This helps them understand consumer pain points, preferences, and expectations, ultimately increasing customer satisfaction and loyalty.

- Dynamic pricing strategies

E-commerce platforms and retailers use real-time web scraping to track competitor pricing and dynamically adjust their own prices, ensuring they remain competitive while maximizing profit margins.

- Content optimization

Businesses collect data on popular keywords, trending topics, and audience engagement metrics to optimize the SEO of their content and increase its online visibility.

- Risk management

Companies use web data collection to monitor potential risks, such as regulatory changes, reputation issues, or supply chain disruptions. This enables them to take proactive measures and mitigate risks effectively.

- AI and machine learning data

Enterprises collect large datasets to train AI models and enhance machine learning algorithms. For example, scraping image, text, or language data helps improve AI-based solutions such as recommendation systems or predictive analytics.
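As an illustration of the dynamic pricing strategy above, here is a minimal repricing rule: undercut the cheapest scraped competitor price by a small amount, but never drop below a margin floor. The `reprice` function, the 10% minimum margin, and the one-cent undercut are hypothetical choices for this sketch, not a recommended policy.

```python
from typing import Sequence


def reprice(current_price: float, competitor_prices: Sequence[float],
            unit_cost: float, min_margin: float = 0.10,
            undercut: float = 0.01) -> float:
    """Hypothetical rule: beat the cheapest competitor, but respect a margin floor."""
    # Lowest acceptable price: unit cost plus the minimum margin
    floor = unit_cost * (1 + min_margin)
    if not competitor_prices:
        # No scraped competitor data: keep the current price (still respecting the floor)
        return round(max(current_price, floor), 2)
    # Undercut the cheapest competitor slightly...
    target = min(competitor_prices) - undercut
    # ...but never go below the margin floor
    return round(max(target, floor), 2)


# Example: competitor prices scraped from three (hypothetical) stores
print(reprice(24.99, [22.50, 23.10, 25.00], unit_cost=15.00))  # 22.49
```

Keeping the floor separate from the undercut rule means a single mispriced (or mis-scraped) competitor listing cannot drag your price below cost.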

5 Best Tools for Web Data Collection

Criteria for Evaluation

The ranking below considers the following criteria:

Efficiency: Speed and accuracy of data collection.

Anti-blocking features: Ability to bypass anti-scraping measures.

User experience: Ease of use, intuitive UI, and setup time.

Compatibility: Supported languages, platforms, and integrations.

Cost-effectiveness: Value for money based on features and pricing.

Legal compliance: Adherence to data privacy laws like GDPR and CCPA.

#1. Scrapeless

Scrapeless stands out as the top tool for web data collection, offering unparalleled reliability, affordability, and ease of use. Designed to meet the needs of modern data scraping, Scrapeless combines cutting-edge technology with a suite of integrated features, making it an all-in-one solution for any data collection challenge.

Why Do More than 2,000 Businesses Use Scrapeless for Data Collection?

Affordable Pricing: Scrapeless is designed to offer exceptional value.

Stability and Reliability: With a proven track record, Scrapeless provides steady API responses, even under high workloads.

High Success Rates: Say goodbye to failed extractions. Scrapeless promises a 99.99% success rate when accessing web data.

Scalability: Handle thousands of queries effortlessly, thanks to the robust infrastructure behind Scrapeless.

What sets Scrapeless apart is its impressive stability and high success rate, ensuring smooth and uninterrupted operations. Its cost-effective pricing makes it accessible to businesses of all sizes, while its user-friendly interface allows even non-technical users to get started effortlessly. Moreover, Scrapeless is recognized for its quick response times, providing seamless performance across various scraping scenarios.

The platform's true power lies in its integrated features: a web unlocker, scraping browser, scraping API, CAPTCHA solver, and built-in proxies, all of which work together to handle complex web scraping tasks with ease. Scrapeless employs advanced anti-detection technology to bypass 99.99% of anti-bot detection and network restrictions, giving users a reliable and efficient way past even the toughest barriers.

#2. Mention

Mention is a media monitoring platform that allows startups to track brand mentions and sentiment across the web. Features include news monitoring, keyword alerts, and influencer discovery.

Mention enables small startups to stay on top of online conversations about their brand with an easy-to-use and affordable monitoring solution. Its insights help teams engage with prospects and influencers.

#3. SurveyMonkey

SurveyMonkey provides startups with an easy-to-use online survey platform for collecting customer, employee, and market insights. Features include survey building, distribution, analytics tools, and integrations.

SurveyMonkey enables early-stage companies to create and manage feedback surveys without extensive expertise. Affordable plans offer powerful features and support.

#4. Lead411

Lead411 offers a sales intelligence platform tailored for startups looking to grow their pipeline. Key features include lead and company data, email lookup tools, and real-time alerts.

Lead411 provides sales teams with an easy way to identify leads and enhance outbound marketing campaigns. Competitive entry-level pricing removes barriers to early growth.

#5. Magpi

Magpi is a mobile-first data collection system tailor-made for startups and small research teams. Features include forms, surveys, offline data capture, analytics, and dataset management.

Magpi provides organizations with a way to collect insights in the field without requiring extensive in-house expertise. The Basic plan offers advanced features to support a variety of use cases.

Scraping APIs: the Best Method of Web Data Collection

Many websites and platforms offer APIs that allow developers to access specific data in a structured format. APIs are reliable, efficient, and often include real-time updates. Examples include the Twitter API, Google SERP API, and e-commerce APIs.

However, official APIs may impose rate limits or restrict which data you can access, and they are often expensive.

Fortunately, some third-party scraping APIs, such as Scrapeless, are affordable and offer high stability and success rates.
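Whether you call an official API or a third-party scraping API, rate limits mean your client should pace its requests. The sketch below is a generic client-side token bucket, assuming the limit is expressed as N requests per time window; the `RateLimiter` class and the 10-requests-per-half-second figure are hypothetical and not tied to any specific provider's limits.

```python
import time


class RateLimiter:
    """Token bucket: allows bursts of up to `rate` calls, refilled at `rate` per `per` seconds."""

    def __init__(self, rate: int, per: float = 1.0) -> None:
        self.capacity = float(rate)
        self.tokens = float(rate)      # start with a full bucket
        self.fill_rate = rate / per    # tokens added per second
        self.last = time.monotonic()

    def acquire(self) -> None:
        """Block until a token is available, then consume it."""
        while True:
            now = time.monotonic()
            # Refill in proportion to elapsed time, capped at capacity
            self.tokens = min(self.capacity,
                              self.tokens + (now - self.last) * self.fill_rate)
            self.last = now
            if self.tokens >= 1.0:
                self.tokens -= 1.0
                return
            # Sleep roughly until one whole token has accumulated
            time.sleep((1.0 - self.tokens) / self.fill_rate)


# Hypothetical limit: 10 requests per 0.5 seconds
limiter = RateLimiter(rate=10, per=0.5)
start = time.monotonic()
for _ in range(20):
    limiter.acquire()
    # ...send one API request here...
elapsed = time.monotonic() - start
print(f"20 paced calls took {elapsed:.2f}s")
```

The first 10 calls use up the initial burst; the remaining 10 are spaced about 0.05 s apart, so the loop takes roughly half a second instead of hitting the API all at once.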

Scrapeless offers a reliable and scalable web scraping platform at competitive prices, ensuring excellent value for its users:

Scraping Browser: From $0.09 per hour

Scraping API: From $0.80 per 1k URLs

Web Unlocker: $0.20 per 1k URLs

Captcha Solver: From $0.80 per 1k URLs

Proxies: $2.80 per GB

By subscribing, you can enjoy discounts of up to 20% on each service. Do you have specific requirements? Contact us today, and we'll provide even greater savings tailored to your needs!
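To estimate a budget from the prices above, multiply each usage volume by its unit rate and apply any subscription discount. This sketch assumes the listed starting ("from") rates and treats the discount as a flat fraction capped at the 20% maximum mentioned above; actual pricing depends on your plan, and the `estimate_cost` helper and service keys are hypothetical.

```python
# Assumption: starting ("from") rates as listed above; actual rates depend on your plan.
PRICE_PER_1K_URLS = {
    "scraping_api": 0.80,
    "web_unlocker": 0.20,
    "captcha_solver": 0.80,
}
PRICE_PER_GB_PROXY = 2.80
PRICE_PER_HOUR_BROWSER = 0.09


def estimate_cost(urls_by_service: dict[str, int], proxy_gb: float = 0.0,
                  browser_hours: float = 0.0, discount: float = 0.0) -> float:
    """Estimate spend in USD; `discount` is a fraction, e.g. 0.20 for 20%."""
    if not 0.0 <= discount <= 0.20:
        raise ValueError("discount must be between 0 and 0.20")
    # Per-URL services are billed per 1,000 URLs
    total = sum(PRICE_PER_1K_URLS[svc] * n / 1000 for svc, n in urls_by_service.items())
    # Proxies are billed per GB, the scraping browser per hour
    total += proxy_gb * PRICE_PER_GB_PROXY + browser_hours * PRICE_PER_HOUR_BROWSER
    return round(total * (1 - discount), 2)


# Example: 50,000 Scraping API requests plus 5 GB of proxy traffic
print(estimate_cost({"scraping_api": 50_000}, proxy_gb=5))  # 0.80 * 50 + 2.80 * 5 = 54.0
```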

Let's see why the Scrapeless Scraping API is effective for data collection. Follow these steps to scrape Google Search data.

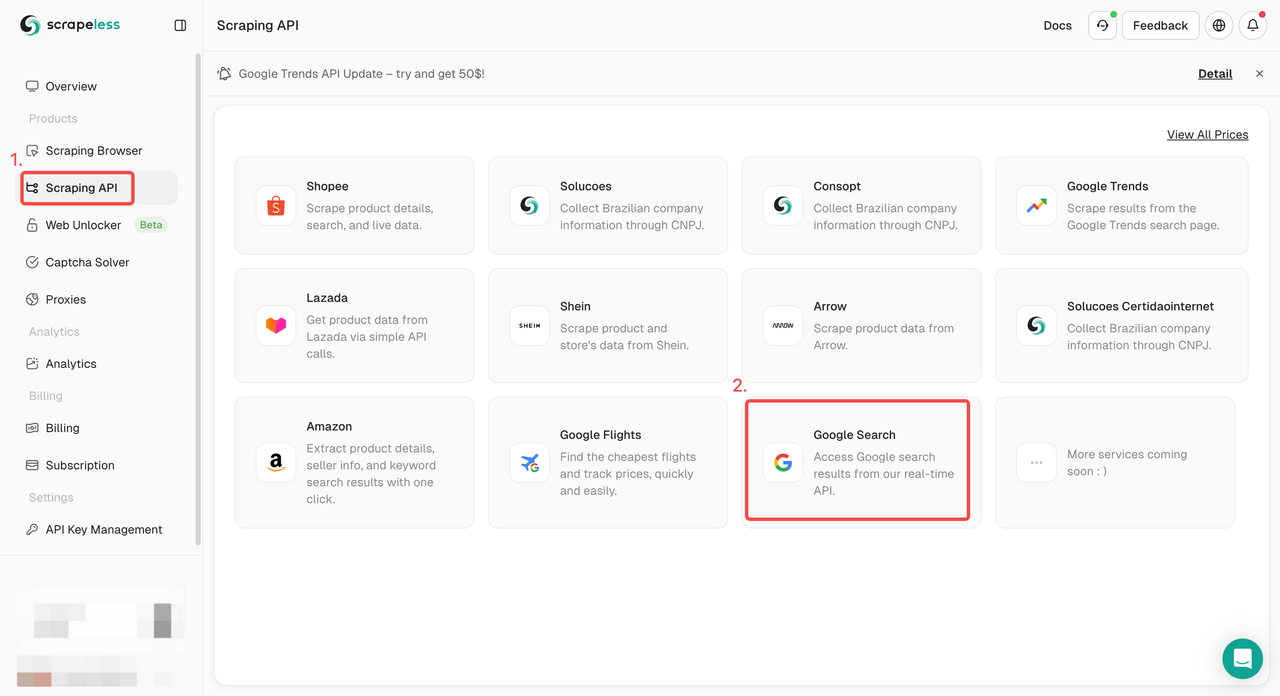

Step 1. Log into the Scrapeless Dashboard and go to "Google Search API".

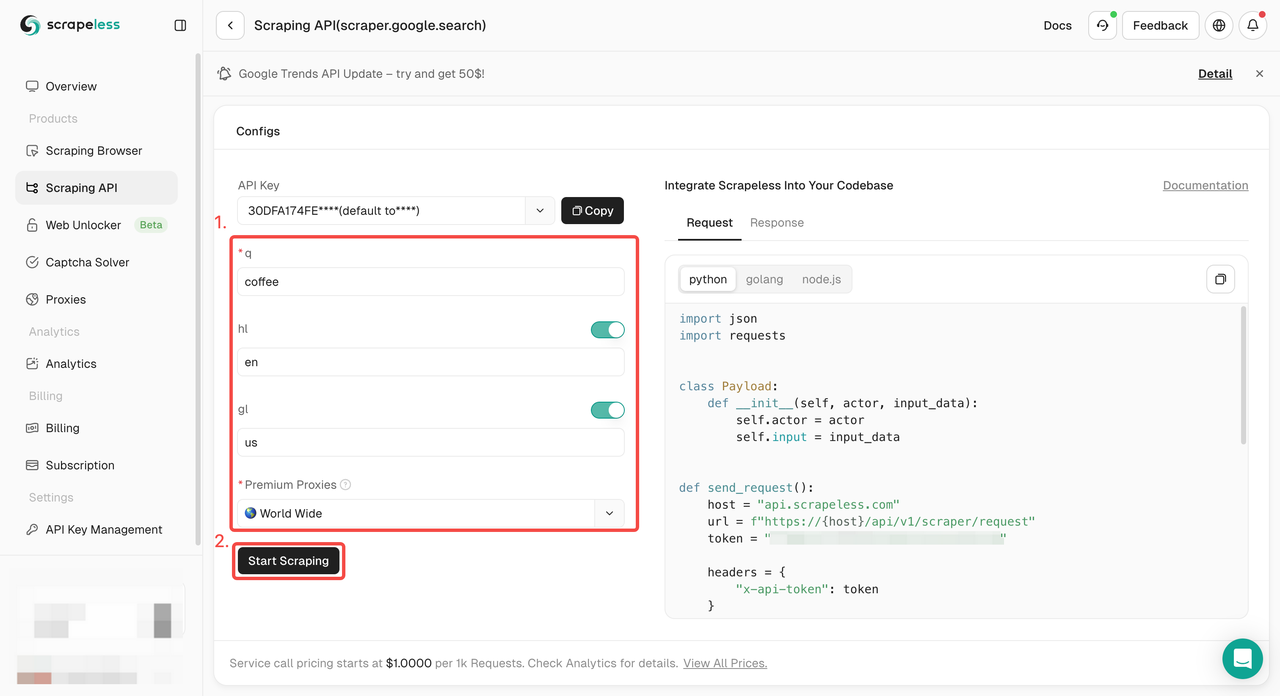

Step 2. Configure the keywords, region, language, proxy and other information you need on the left. After making sure everything is OK, click "Start Scraping".

- q: the query you want to search for.
- gl: the country to use for the Google search.
- hl: the language to use for the Google search.

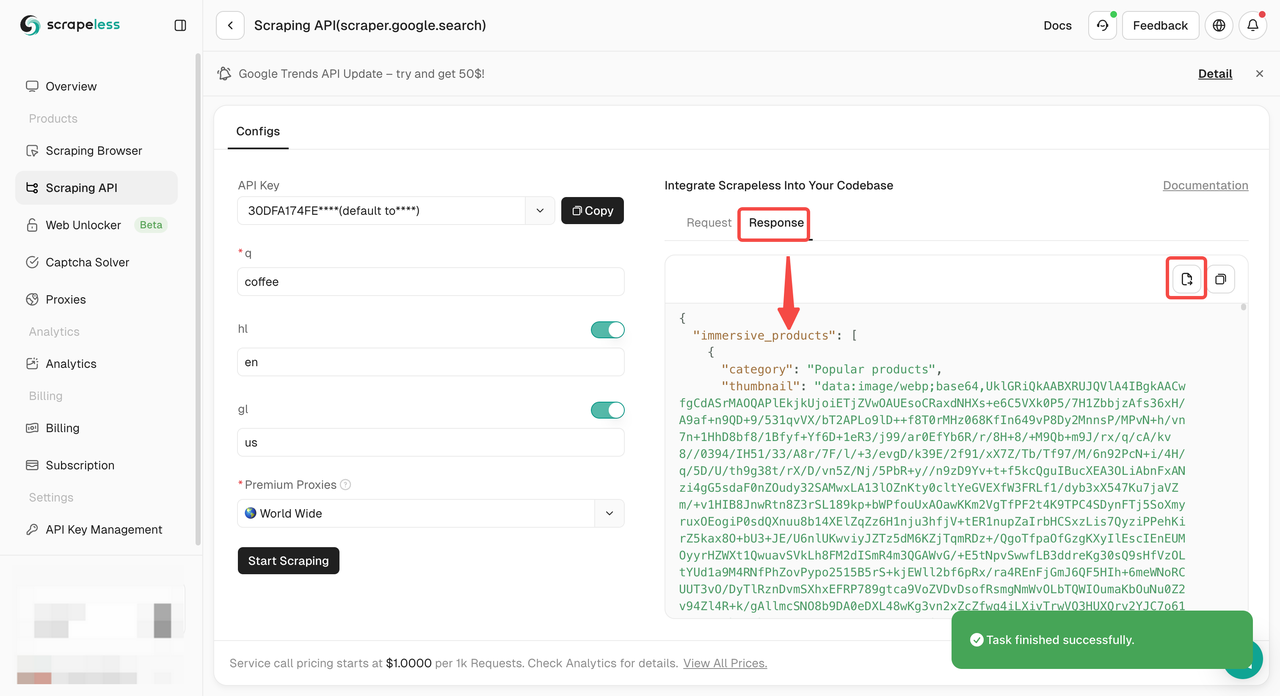

Step 3. Get the crawling results and export them.

Just need sample code to integrate into your project? We've got you covered! For other languages, visit our API documentation.

- Python:

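```python
import http.client
import json

# Open an HTTPS connection to the Scrapeless API host
conn = http.client.HTTPSConnection("api.scrapeless.com")

# Request body: the actor to run and its input parameters
payload = json.dumps({
    "actor": "scraper.google.search",
    "input": {
        "q": "coffee",  # search query
        "hl": "en",     # language
        "gl": "us"      # country
    }
})

headers = {
    "Content-Type": "application/json",
    # Add your API token header here as described in the Scrapeless API documentation
}

conn.request("POST", "/api/v1/scraper/request", payload, headers)
res = conn.getresponse()
data = res.read()
print(data.decode("utf-8"))
conn.close()
```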

- Golang:

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"strings"
)

func main() {
	url := "https://api.scrapeless.com/api/v1/scraper/request"
	method := "POST"

	// Request body: the actor to run and its input parameters
	payload := strings.NewReader(`{
		"actor": "scraper.google.search",
		"input": {
			"q": "coffee",
			"hl": "en",
			"gl": "us"
		}
	}`)

	client := &http.Client{}
	req, err := http.NewRequest(method, url, payload)
	if err != nil {
		fmt.Println(err)
		return
	}
	req.Header.Add("Content-Type", "application/json")
	// Add your API token header here as described in the Scrapeless API documentation

	res, err := client.Do(req)
	if err != nil {
		fmt.Println(err)
		return
	}
	defer res.Body.Close()

	// io.ReadAll replaces the deprecated ioutil.ReadAll (Go 1.16+)
	body, err := io.ReadAll(res.Body)
	if err != nil {
		fmt.Println(err)
		return
	}
	fmt.Println(string(body))
}
```

Why Are More and More Companies Using Data Collection Tools?

Improved Efficiency and Productivity: Data creates a crucial feedback loop for organizations. For example, a company in the ad tech industry can use web data to automatically validate ad copy, link placement, and images, ensuring that the correct ad reaches the right audience, eliminating manual checks and optimizing results. 📈

Faster and More Effective Decision-Making: Real-time web data collection enables companies to make key decisions instantly. For instance, investment firms can collect data on stock trading volume or social sentiment to make better buy/sell decisions. 💡

Better Financial Performance: Companies can improve profitability by analyzing web traffic, keywords, and search trends, leading to better product and brand positioning and more targeted lead generation. 💰

Identifying and Creating New Product and Service Revenue: Through data-driven market research, companies can uncover new revenue opportunities. For example, a company analyzing the competitive landscape might identify unmet consumer needs through consumer reviews and feedback. 📊

Improved Customer Experience: Businesses can use web data for website and user experience testing, using geographic user data to ensure that ads, content, and applications perform as expected in each region. 🌐

Competitive Advantage: Web data allows companies to gain a competitive edge by comparing real-time pricing and package offers. The travel industry is a great example: online travel agencies (OTAs) use data collection to build dynamic pricing strategies that undercut competitors. 🏆

Find Your Best Data Collection Tool!

Whether you're running a survey or compiling a compliance report, these data collection tools can help you easily gather the information you need from the right sources. Each of the five tools in this article suits a different use case.

However, if you'd rather not compare and juggle several tools, you can use Scrapeless directly! It is a powerful data collection toolkit. With its advanced AI tools and JS rendering, you can easily and accurately obtain the data you need.